Hey everyone,

This week’s module focuses on “Generative AI” and “Data Visualization”.

Generative AI, of course, is the hot topic of the year, and something I’m pretty familiar with at this point. Earlier this year, when everyone was talking about “AI art”, I was there with them – trying out all of the new tools such as Midjourney, Stable Diffusion, and DALL-E mini (if I remember correctly, DALL-E 2 was invite-only around this time). ChatGPT, at least the earlier models, was also something I experimented with but didn’t use all that frequently. However, that’s where I stopped – I haven’t used any of these tools for at least half a year now, so having an opportunity to explore what has changed, and what has emerged onto the scene, was rather exciting.

Data visualization is also something I’m familiar with, but it was still extremely interesting to go over the various ways to represent data. The accessibility side of data visualization was the most interesting part for me. I’m slightly colourblind myself, so it was great to see an example showing how to choose colour palettes that accommodate colourblindness.

Generating music through AI

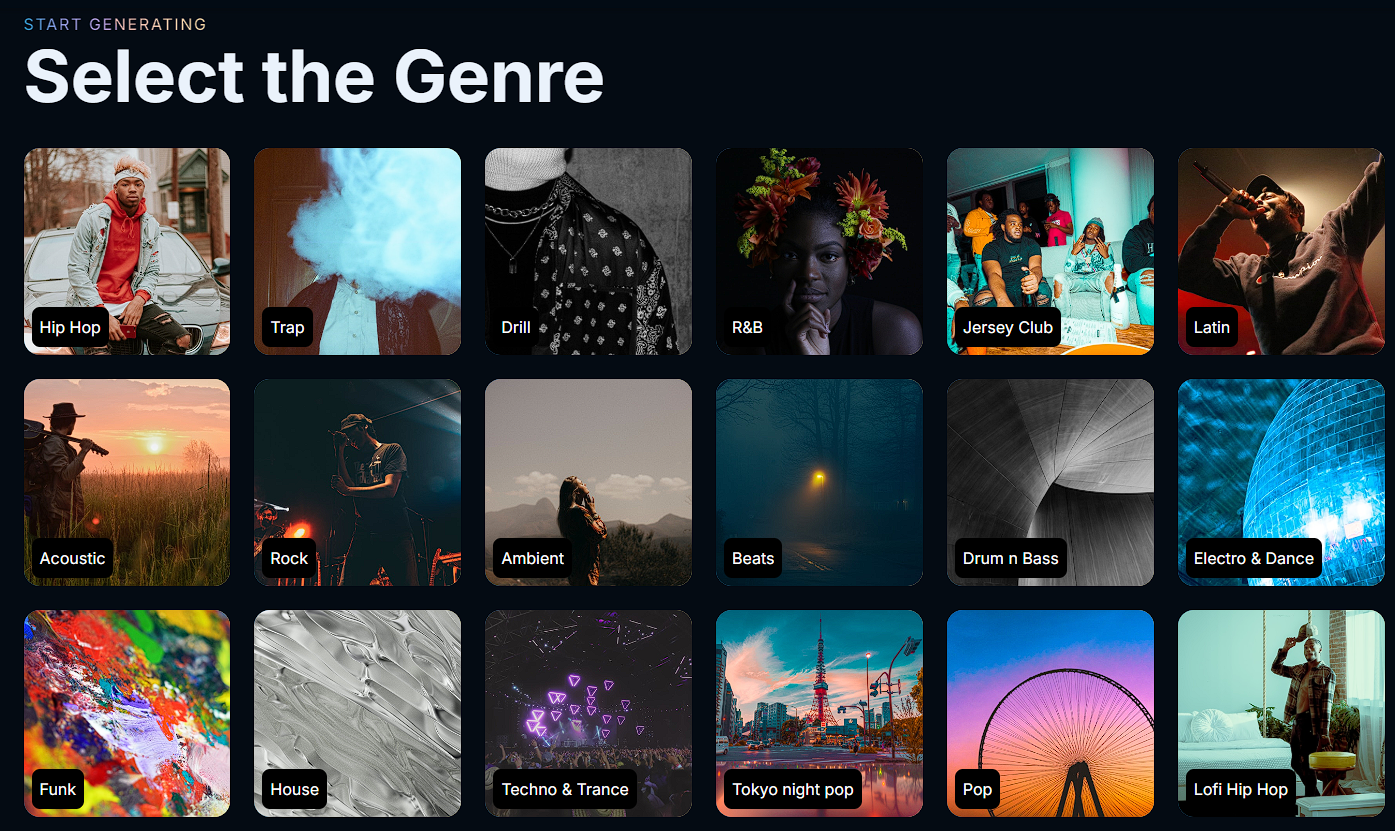

Generating text and images has already been explored extensively by millions (including myself), so I thought it would be better to start with something I wasn’t really aware of: using AI to generate music. I was unfamiliar with both of the tools listed, so I just chose whichever appeared to be easier to use, which was SOUNDRAW. Initially, I was amazed at the vast array of genres that they had!

Screenshot of SOUNDRAW’s library of genres

“Tokyo night pop” sounded pretty interesting, so I decided to start with that. There were multiple themes and moods to choose from, you could alter the length of the song, and you could even choose which instruments you wanted present in it. After I clicked around and selected what I liked, it generated 15 different songs to listen to! Pretty cool. Unfortunately, it suffers from the same issue as AI-generated imagery: it’s completely soulless. The “music” it gives you is so bland and so uninspired, and it all sounds exactly the same. It really drives home how important the “human touch” is in any form of art, especially music. Nevertheless, I generated a link to one of the songs I created so that you can listen to it (SOUNDRAW, 2023).

Creating slideshows with AI

Next, it was on to another tool: Tome. With Tome, you can generate slideshows using prompts, which on the surface sounds like a fantastic idea! Presentations can feel extremely draining to create, so being able to use AI to generate them for you is a pretty neat use case. To test this, I went with an extremely simple prompt: “cats”. It generated a history of cats, different breeds, famous cats, and so on, and turned this all into a slideshow. It also allowed me to choose the layout for each individual slide, which was nice. Unfortunately, the layouts are all pretty much the same, and they fall into the same general issue of AI generating pretty bland things. Also, it’s not really a good slideshow – it generates way too much text. It does give you options to edit what it has generated, which is pretty nice, but if I’m generating a slideshow using AI, I presumably wouldn’t be that interested in doing large amounts of editing to fix the layout issues. Anyways, the slideshow can be found here (Tome, 2023).

Text to video with the power of AI

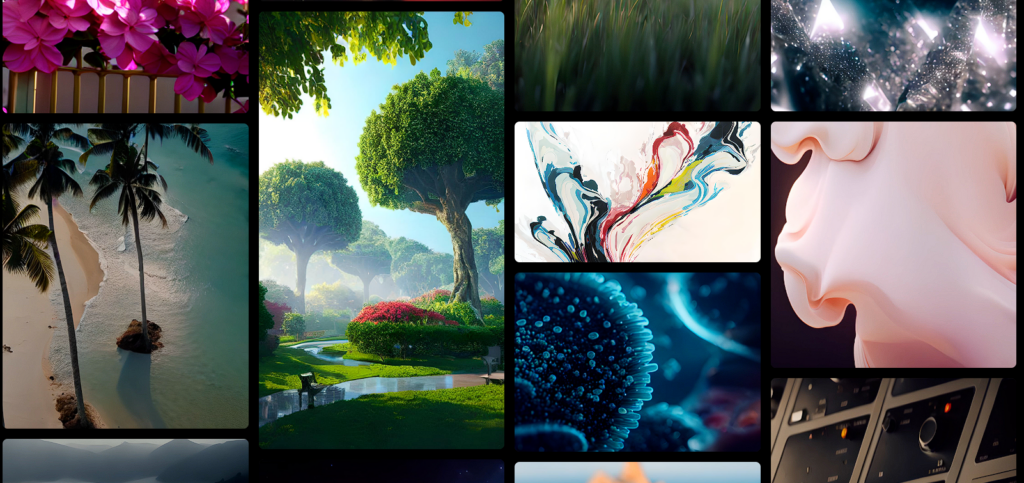

Finally, we’re exploring Runway.ml. This tool has an expansive suite of features, such as generating videos, editing videos, and turning images into video, all powered by AI. As with most tools here, it offers a gallery of examples when you first open the app:

Screenshot of Runway’s gallery of example videos.

Intrigued by the tool that allows you to turn images into video, I tested it out. For this, I generated an image using Stable Diffusion:

“Art of a waterfall in the middle of a forest in the style of Studio Ghibli” (Stable Diffusion, 2023).

Then, I tossed this image into Runway’s video generator and ran it a couple of times. Here’s the result (Runway, 2023). Obviously, this isn’t the only use of Runway – there’s much more to it, including some very neat features for editing video and audio (such as blurring faces, automatically adding subtitles, and cleaning up audio by removing background noise). I think that, out of the tools explored today, this is probably the most useful, for the video editing suite alone.

The evolution of AI tools

For the most part, I was pretty disappointed by the abilities of the tools I explored. However, that will not always be the case. I believe that with the right resources and the high level of attention that AI is receiving right now, this technology will improve very quickly. In 2-3 years, the music generated might not be soulless and bland! The images generated will be even higher quality, and ChatGPT (if it still exists at that point; the company seems to be going through a bit of a rough spot…) will be even more powerful than it is now.

Using AI to create an inclusive learning experience

Most of the tools explored have practically no use in creating an inclusive learning experience. However, there is one tool that I think is amazing for this purpose: ChatGPT. At least for introductory courses, ChatGPT can act as a “professor on demand” and answer any questions you might have about (simple) topics. It could also generate exercises for a topic depending on the learner’s level of understanding (e.g., if the learner isn’t that experienced with a topic, it could give easier exercises and explain step by step how to work through them).

I personally believe the “Medieval Plague Simulation” is also a good way to create an inclusive learning environment. Some learners may not understand scenarios very well when they are only described, so being able to “go back in time” and work through a simulation like that would help a whole new group of learners.

Of course, there are some groups who can be excluded by the use of AI. I believe that would mainly be people who do not have an easy way to access this technology.

Final thoughts

I think it was very interesting to view the current state of AI. Unfortunately, most generative AI (outside of text) tends to be very bland and uninspired. I do think this is part of the growing pains of AI, as we are experiencing a relatively new era with this technology. As these models are trained and improved upon, they’ll be much more interesting and useful in the types of content that they generate.

References

Runway. (2023, November 22). [Runway’s generated video in response to an image prompt]. https://runwayml.com/

SOUNDRAW. (2023, November 22). [Generated audio produced by SOUNDRAW in response to the prompt, “Tokyo City Pop”]. https://soundraw.io/

Stable Diffusion. (2023, November 22). [Generated image in response to a prompt asking to create artwork of a forest waterfall in the style of Studio Ghibli]. https://stablediffusionweb.com/

Tome. (2023, November 22). [Generated slideshow in response to a prompt asking to create a presentation about cats]. https://tome.app/
